Jupyter Notebook is a popular tool among data scientists, who use it to create, verify and share models in their favorite programming language. Spark supports both batch and real-time analysis of big data, and its MLlib component provides a wide range of machine learning algorithms. An environment that integrates jupyter with spark is therefore a natural fit for machine learning work. This post introduces 4 ways to integrate jupyter and spark.

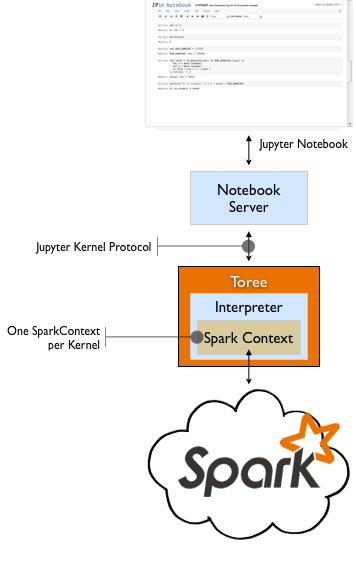

Using toree, which was previously called “Spark Kernel” (jupyter + toree)

If you plan to run toree on the jupyter server, be aware of the following limitations.

- spark or a spark client needs to be installed on this server.

- spark-submit is also launched from this server.
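Both limitations can be checked before toree is installed. The following is a minimal sketch, assuming spark is unpacked under the directory named by the SPARK_HOME environment variable; the helper name `locate_spark_submit` is made up for illustration.

```python
import os
from pathlib import Path
from typing import Optional


def locate_spark_submit(spark_home: Optional[str]) -> Optional[Path]:
    """Return the path to spark-submit under spark_home, or None if it is missing."""
    if not spark_home:
        return None
    candidate = Path(spark_home) / "bin" / "spark-submit"
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    # SPARK_HOME is assumed to be exported on the jupyter server.
    found = locate_spark_submit(os.environ.get("SPARK_HOME"))
    if found is None:
        print("spark-submit not found; check SPARK_HOME on the jupyter server")
    else:
        print(f"spark-submit found at {found}")
```

Running the script on the jupyter server shows whether the kernel would have a spark-submit to launch.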

# install spark

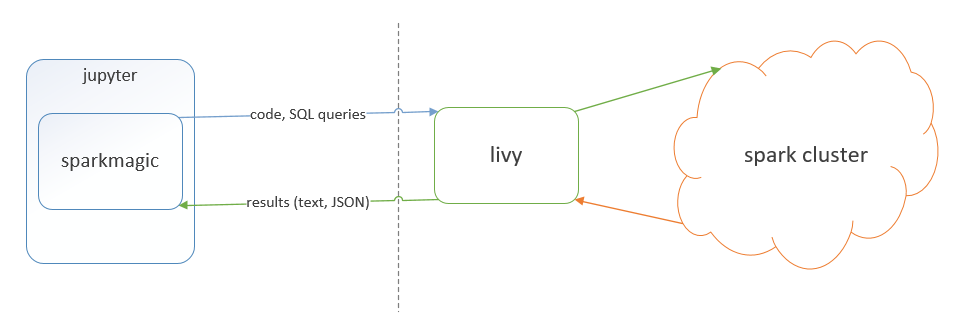

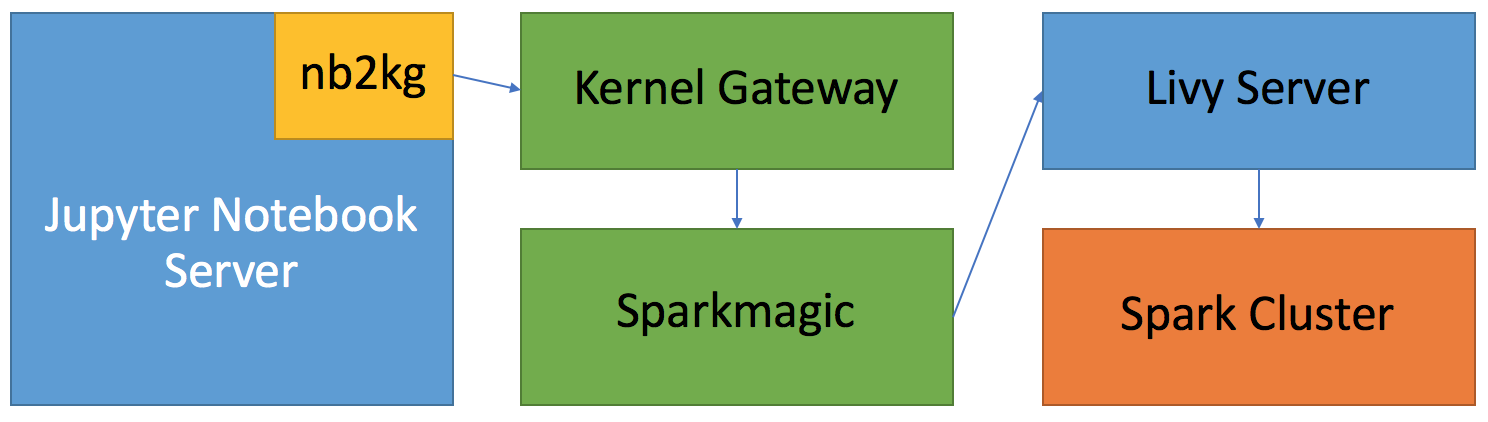
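Once toree is installed and registered, jupyter should see it as a kernel spec, that is, a directory holding a kernel.json file under a kernels folder in one of the jupyter data directories. The sketch below lists the kernel specs found under a set of data directories. The function name is hypothetical, and the directories passed in are assumed to be the data paths printed by jupyter --paths.

```python
import json
from pathlib import Path
from typing import Dict, Iterable


def list_kernel_specs(data_dirs: Iterable[str]) -> Dict[str, str]:
    """Map kernel name -> display name for every kernel.json under <data_dir>/kernels."""
    specs: Dict[str, str] = {}
    for data_dir in data_dirs:
        kernels_dir = Path(data_dir) / "kernels"
        if not kernels_dir.is_dir():
            continue  # this data directory has no kernels installed
        for kernel_json in sorted(kernels_dir.glob("*/kernel.json")):
            name = kernel_json.parent.name
            if name in specs:
                continue  # directories listed earlier take precedence
            with kernel_json.open(encoding="utf-8") as fh:
                spec = json.load(fh)
            specs[name] = spec.get("display_name", name)
    return specs
```

A toree kernel would be expected to show up under a name such as apache_toree_scala, although the exact name depends on how the kernel was installed.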

Using sparkmagic and livy (jupyter + sparkmagic + livy)

livy is a REST server for spark. sparkmagic works together with the livy server to provide several kernels for jupyter notebook, such as pyspark, pyspark3 and sparkr. This approach has the following features.

- there is no need to install a spark client on the jupyter server.

- spark jobs are submitted from the livy side instead of the jupyter server side.
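Because livy exposes spark over REST, the jupyter side only needs to send HTTP requests. As a rough illustration, the sketch below builds (without sending) the two requests at the heart of that exchange: one that starts a session and one that runs code in it. The host name livy-host is a placeholder, 8998 is livy's default port, and the helper names are invented for this example.

```python
import json
from urllib.request import Request

LIVY_URL = "http://livy-host:8998"  # hypothetical host; 8998 is Livy's default port


def new_session_request(livy_url: str, kind: str = "pyspark") -> Request:
    """Build (but do not send) the request that asks Livy to start a session."""
    body = json.dumps({"kind": kind}).encode("utf-8")
    return Request(
        f"{livy_url}/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def statement_request(livy_url: str, session_id: int, code: str) -> Request:
    """Build the request that runs a snippet of code inside an existing session."""
    body = json.dumps({"code": code}).encode("utf-8")
    return Request(
        f"{livy_url}/sessions/{session_id}/statements",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = new_session_request(LIVY_URL)
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Passing either request to urllib.request.urlopen against a running livy server returns a JSON description of the session or statement. This is roughly the work sparkmagic does on the notebook's behalf.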

# install sparkmagic

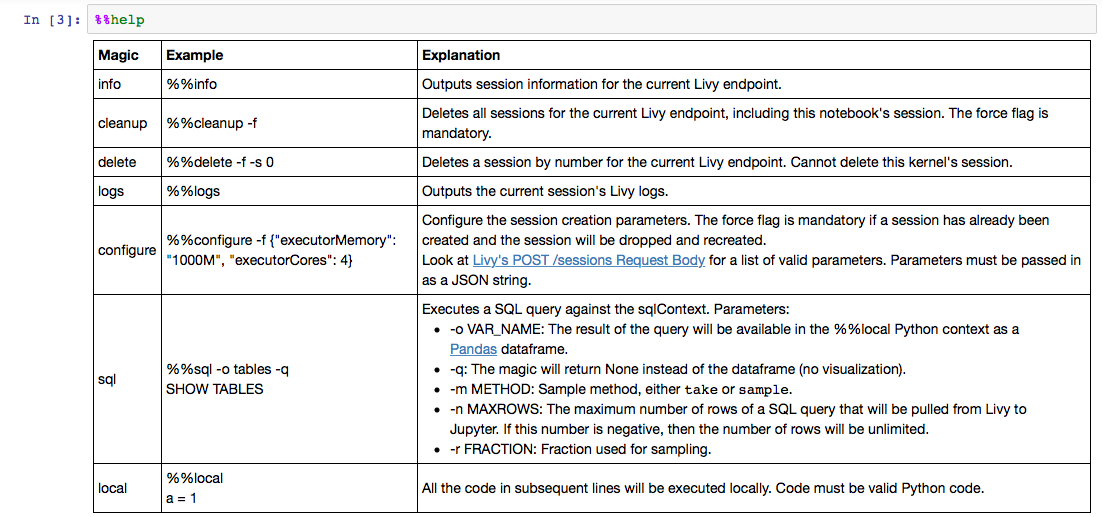

Notebook magic list in sparkmagic

The integration chart for sparkmagic and livy

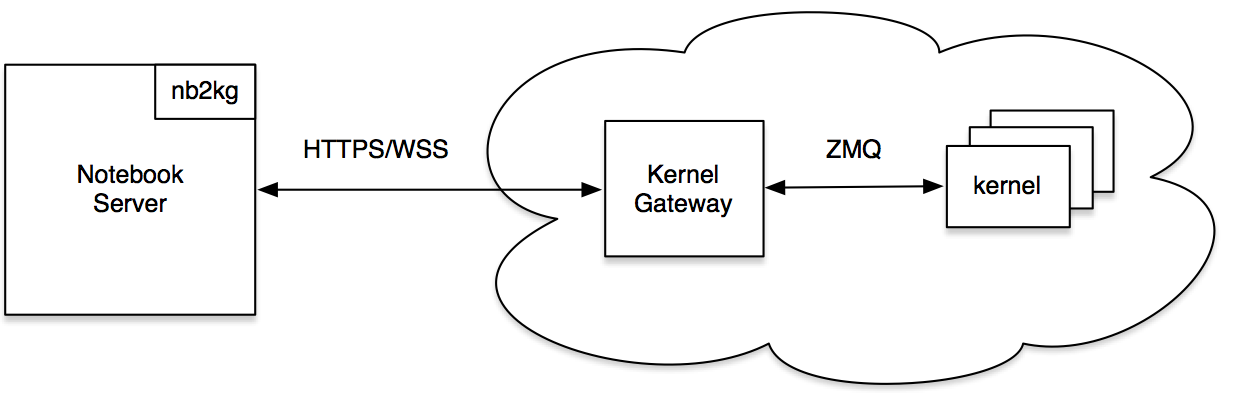

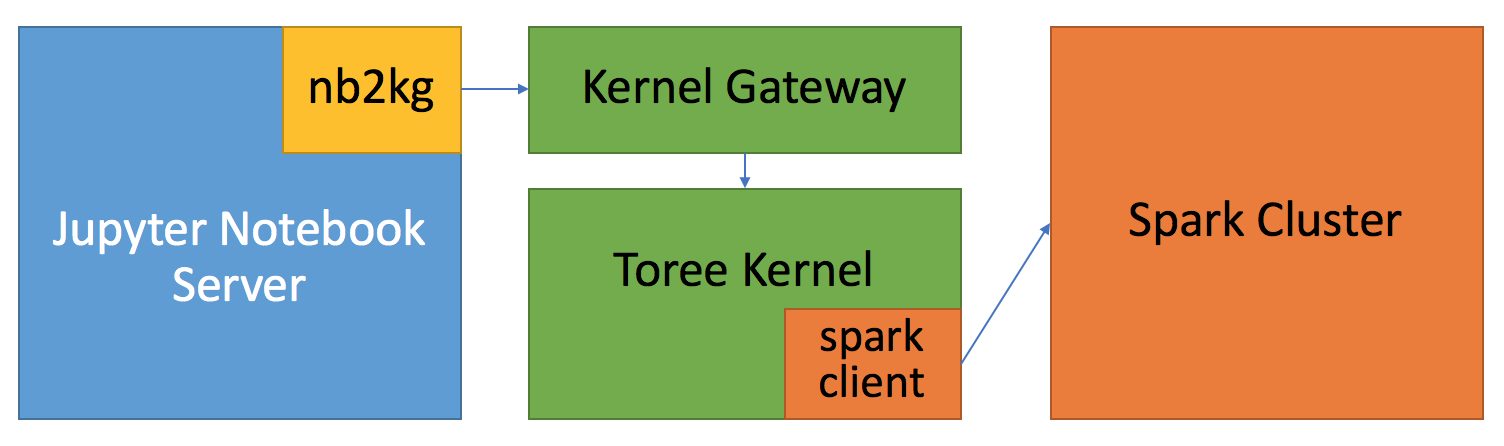
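sparkmagic has to be told where the livy server is. It reads that from a JSON file, by default ~/.sparkmagic/config.json. The sketch below builds a minimal version of that file. The key names follow sparkmagic's example config and should be checked against the installed version; livy-host is a placeholder and the helper names are hypothetical.

```python
import json
from pathlib import Path


def sparkmagic_config(livy_url: str, username: str = "", password: str = "") -> dict:
    """Build a minimal sparkmagic config that points its kernels at one Livy server."""
    credentials = {"username": username, "password": password, "url": livy_url}
    return {
        "kernel_python_credentials": dict(credentials),
        "kernel_scala_credentials": dict(credentials),
    }


def write_config(config: dict, path: Path) -> None:
    """Write the config as JSON, creating the parent directory if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


if __name__ == "__main__":
    # Print instead of writing, so an existing config is not overwritten by accident.
    print(json.dumps(sparkmagic_config("http://livy-host:8998"), indent=2))
```

To apply it, call write_config with Path.home() / ".sparkmagic" / "config.json" after reviewing the printed output.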

Using nb2kg, kernelgateway and toree (jupyter + nb2kg + kernelgateway + toree)

- spark or a spark client needs to be installed on the kernelgateway side.

- spark-submit is run on the kernelgateway side.

Install nb2kg and run the notebook server

    # install nb2kg
    pip install nb2kg

    # register nb2kg
    jupyter serverextension enable --py nb2kg --sys-prefix

    # start jupyter notebook server
    export KG_URL=http://kg-host:port
    jupyter notebook \
      --NotebookApp.session_manager_class=nb2kg.managers.SessionManager \
      --NotebookApp.kernel_manager_class=nb2kg.managers.RemoteKernelManager \
      --NotebookApp.kernel_spec_manager_class=nb2kg.managers.RemoteKernelSpecManager

    # verify that nb2kg is enabled
    jupyter serverextension list

    # output:
    #   nb2kg enabled
    #   - Validating...
    #   nb2kg OK

    # uninstall nb2kg
    jupyter serverextension disable --py nb2kg --sys-prefix
    pip uninstall -y nb2kg

Install kernel gateway

    # install from pypi

- Install toree on the server where kernelgateway is installed.

For the detailed steps, please refer to the toree section above.
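With this setup the notebook server no longer starts kernels itself. It asks the kernel gateway at KG_URL which kernels exist and launches them there. A small sketch of that first question is shown below, assuming the gateway runs in its default mode and serves the usual Jupyter /api/kernelspecs endpoint; the function names are invented for illustration.

```python
import json
from typing import List
from urllib.request import urlopen


def kernelspecs_url(kg_url: str) -> str:
    """URL of the kernel spec listing on a kernel gateway."""
    return kg_url.rstrip("/") + "/api/kernelspecs"


def kernel_names(payload: dict) -> List[str]:
    """Extract the sorted kernel names from a kernelspecs response body."""
    return sorted(payload.get("kernelspecs", {}))


def fetch_kernel_names(kg_url: str, timeout: float = 5.0) -> List[str]:
    """Ask the gateway which kernels it offers (requires a running gateway)."""
    with urlopen(kernelspecs_url(kg_url), timeout=timeout) as resp:
        return kernel_names(json.load(resp))
```

Calling fetch_kernel_names with the same value as KG_URL should list the toree kernel once toree is installed on the gateway host. If it is missing there, it will not appear in the notebook either.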

Using nb2kg, kernelgateway, sparkmagic and livy (jupyter + nb2kg + kernelgateway + sparkmagic + livy)

- there is no need to install spark or a spark client, since spark is called through livy.

- spark jobs are submitted from the livy side instead of the kernelgateway side.

For the detailed steps, please refer to the sections above.

A step-by-step example of using toree in jupyter.

- Install spark

      export SPARK_HOME=/Users/luliang/Tools/spark-2.1.1-bin-hadoop2.7

- Install anaconda, which includes jupyter and python.

      # install anaconda

- List the paths jupyter uses for configuration, data and runtime files.

      jupyter --paths

- Install toree.

      pip install toree

- List the currently running notebook servers.

      jupyter notebook list

- Generate the configuration file under ~/.jupyter

      # Writing default config to: /Users/luliang/.jupyter/jupyter_notebook_config.py
      jupyter-notebook --generate-config

- (Optional) Generate a password and set it for the notebook

      # generate the password: run ipython in a terminal

- (Optional) Configure the notebook with https

      # A self-signed certificate can be generated with openssl.

- Now you can access Jupyter at this url: https://hdte.xxx.xxx.com:6666/
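The optional password and https steps both end up as settings in jupyter_notebook_config.py. Below is a minimal sketch of what that fragment might look like for the classic notebook server. The certificate paths, the listen address and the password value are placeholders rather than values from this setup; the port is taken from the url above.

```python
# Fragment for ~/.jupyter/jupyter_notebook_config.py (classic notebook server).
# Jupyter provides get_config() when it loads this file; it is not a standalone script.
c = get_config()

# Hypothetical paths to the self-signed certificate and its key.
c.NotebookApp.certfile = "/path/to/mycert.pem"
c.NotebookApp.keyfile = "/path/to/mykey.key"

# Placeholder: paste the hash produced in the password step here.
c.NotebookApp.password = "<hashed password>"

# Listen on all interfaces on the port used in the url above, without opening a browser.
c.NotebookApp.ip = "0.0.0.0"
c.NotebookApp.port = 6666
c.NotebookApp.open_browser = False
```

The notebook server needs to be restarted for these settings to take effect.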

To avoid confusion, here is a list of name changes.

- Spark Kernel (old) ==> Toree (new)

- IPython Notebook (old) ==> Jupyter Notebook (new)

Refer to

- https://jupyter-kernel-gateway.readthedocs.io/en/latest/getting-started.html

- https://github.com/jupyter/kernel_gateway

- https://github.com/jupyter/nb2kg

- https://github.com/apache/incubator-toree

- http://toree.apache.org/docs/current/user/quick-start/

- https://github.com/jupyter-incubator/sparkmagic

Two examples of creating a kernel for jupyter notebook:

- https://github.com/takluyver/bash_kernel/blob/0966dc102d7549f5c909c93de633a95b2af9f707/bash_kernel/kernel.py

- https://github.com/dsblank/simple_kernel
